This post is going to be a bit different from my usual fare in that some of it will be written by an AI (so, much like 90% of the web these days!). In fact, I started out this morning wanting to know more about semantic search and about using large language models to create embeddings for search, thinking I’d probably spend much of the week looking into it. But then I had a great idea: why not ask a large language model how to do it? It turns out it wasn’t quite that easy, but within a couple of hours I had a working proof of concept (POC), which I’ll share later.

So AI is all the rage at the moment, mostly due to advances pioneered by the likes of Google and OpenAI, building on decades of work by researchers. I won’t go too much into large language models here or how they work (mostly because I don’t have a clue!), but suffice it to say that with a bit of tinkering it’s now possible to run pretty decent models on consumer-level hardware. I have a ‘server’ that I built to run this stuff (a Threadripper 2920 with an Nvidia 3070 Ti for inference) and run Ollama on it to serve large language models locally. So I started off by asking a model (mistral-nemo) a simple question:

I want to investigate the use of embeddings for semantic search, can you help with that?

And it replied:

Absolutely, I'd be happy to help you explore the use of embeddings for semantic search! Embeddings are dense vector representations of text or other data that capture semantic meaning and enable tasks like semantic search. Here's an outline of steps we can take to investigate this topic:

1. **Understanding embeddings**:
    * Start by explaining what embeddings are, how they work, and why they're useful for semantic search.
    * Discuss different types of embeddings, such as:
        + Word embeddings (Word2Vec, GloVe)
        + Sentence embeddings (Sentence-BERT, Universal Sentence Encoder)
        + Contextualized embeddings (ELMo, BERT)
    * Explain the difference between count-based and neural network-based embedding methods.

2. **Dimensionality**:
    * Discuss the concept of dimensionality in embeddings and its impact on semantic search performance.
    * Explore techniques like Principal Component Analysis (PCA) or t-SNE for visualizing high-dimensional embeddings.

3. **Evaluation metrics**:
    * Explain evaluation metrics used for semantic search, such as:
        + Mean Reciprocal Rank (MRR)
        + Normalized Discounted Cumulative Gain (NDCG)
        + Recall@k

4. **Embedding databases and indexes**:
    * Discuss efficient storage and indexing techniques for embeddings, like:
        + Inverted File Indexing (IVF)
        + Hierarchical Navigable Small World (HNSW) graphs
        + Approximate Nearest Neighbor (ANN) search algorithms

5. **Practical implementation**:
    * Guide you through creating a simple semantic search engine using embeddings, such as:
        1. Choosing and preprocessing your dataset.
        2. Generating embeddings for your data.
        3. Indexing the embeddings for efficient search.
        4. Implementing a search function that takes queries and returns relevant results based on embedding similarity.

6. **Advanced topics**:
    * Explore more advanced techniques like:
        + Embedding fine-tuning for specific domains or tasks.
        + Using transformer-based models (like BERT, RoBERTa) for high-quality embeddings.
        + Combining embeddings with other features or algorithms for improved search performance.

Throughout this investigation, we can use relevant resources like research papers, tutorials, and open-source libraries to help illustrate concepts and provide practical examples. Here are some resources to get us started:

* "Word Embeddings: A Simple Introduction" by Stanford University: <https://web.stanford.edu/class/cs224n/handouts/wordvecs.pdf>

* Sentence-BERT (SBERT) tutorial: <https://www.sbert.net/docs/quick_start.html>

* Faiss (Facebook AI Similarity Search): <https://github.com/facebookresearch/faiss>

* Annoy (Approximate Nearest Neighbors Oh Yeah): <https://github.com/spotify/annoy>
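Two of the evaluation metrics in that outline, MRR and Recall@k, are short enough to sketch directly. This is a minimal illustration, assuming you have hand-labelled which post IDs are relevant for a few test queries; the IDs and judgements below are made up, and NDCG is left out for brevity.

```python
from collections.abc import Sequence


def reciprocal_rank(ranked_ids: Sequence[str], relevant_ids: set[str]) -> float:
    """1 / rank of the first relevant result, or 0.0 if nothing relevant was returned."""
    for rank, doc_id in enumerate(ranked_ids, start=1):
        if doc_id in relevant_ids:
            return 1.0 / rank
    return 0.0


def mean_reciprocal_rank(runs: Sequence[tuple[Sequence[str], set[str]]]) -> float:
    """Average reciprocal rank over (ranked results, relevant IDs) pairs, one per query."""
    return sum(reciprocal_rank(ranked, relevant) for ranked, relevant in runs) / len(runs)


def recall_at_k(ranked_ids: Sequence[str], relevant_ids: set[str], k: int) -> float:
    """Fraction of the relevant IDs that appear in the top k results."""
    hits = sum(1 for doc_id in ranked_ids[:k] if doc_id in relevant_ids)
    return hits / len(relevant_ids)


# Hypothetical test queries: the post IDs a search returned (best first)
# and the IDs a person judged relevant.
runs = [
    (["12", "7", "3"], {"7"}),      # first relevant hit at rank 2 -> 0.5
    (["5", "9", "1"], {"5", "1"}),  # first relevant hit at rank 1 -> 1.0
]

print(mean_reciprocal_rank(runs))                     # 0.75
print(recall_at_k(["5", "9", "1"], {"5", "1"}, k=2))  # 0.5
```

MRR only rewards the position of the first good hit, while Recall@k asks how many of the good hits made it into the top k, so the two are usually reported together.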

We went back and forth a little to explore this further, with the model walking me through what embeddings actually are:

What are embeddings?

Embeddings are dense vector representations of text or other data that capture semantic meaning. In simpler terms, an embedding is a way to represent complex information (like words, sentences, or images) in a format that machines can understand and process efficiently. Embeddings allow us to perform tasks like similarity measurement, clustering, and classification using techniques from linear algebra and machine learning.

How do embeddings work?

Embeddings are created using algorithms that learn the relationships between data points in a high-dimensional space. For text data, these algorithms analyze large amounts of text corpora to understand how words or phrases relate to one another based on their context and meaning.

Here's an example to illustrate this:

Consider the following sentences:

"The cat sat on the mat."

"The dog sat on the rug."

In both cases, the animals sit on objects with similar meanings ("mat" and "rug"). A well-trained embedding model should capture this relationship by placing these words close together in the embedding space.
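"Close together" is usually measured with cosine similarity, or cosine distance (1 minus the similarity), which is how Chroma defines distance for a collection using the `cosine` space. Below is a minimal sketch using made-up 3-dimensional vectors in place of real embeddings; a real model produces vectors with hundreds or thousands of dimensions. The `nearest` function does an exact brute-force search, which is what ANN indexes such as HNSW approximate so that they don't have to compare the query against every stored vector.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors: 1.0 means same direction, 0.0 orthogonal."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def nearest(word: str, vectors: dict[str, list[float]], k: int = 1) -> list[tuple[str, float]]:
    """Exact (brute-force) k nearest neighbours of `word`, ranked by cosine distance."""
    query = vectors[word]
    scored = [
        (other, 1.0 - cosine_similarity(query, vec))  # cosine distance = 1 - similarity
        for other, vec in vectors.items()
        if other != word
    ]
    return sorted(scored, key=lambda pair: pair[1])[:k]


# Made-up 3-dimensional vectors for illustration only, NOT output from a real model.
toy_vectors = {
    "mat": [0.9, 0.1, 0.2],
    "rug": [0.85, 0.15, 0.25],
    "cat": [0.1, 0.9, 0.3],
    "dog": [0.15, 0.85, 0.35],
}

print(nearest("mat", toy_vectors))  # "rug" comes out as the closest word
```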

Eventually, after a bit more information about the different types of embeddings and how to use them, I got to the implementation:

“Okay, I’m interested in a practical implementation. I have a WordPress database, with posts stored in MariaDB. I have access to Ollama, which runs various models and can be accessed via an OpenAI-compatible API. This includes embedding models such as mxbai-embed-large. I also have access to ChromaDB for storing vector data. Could you walk me through creating a proof of concept of a semantic search engine?”

The code the model produced was… not great. It used out-of-date methods for getting data, didn’t even suggest using the existing Python libraries for Chroma or Ollama, and had some weird issues with adding too many closing brackets to statements. Maybe if I’d used a more code-focussed model I’d have had better results.
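The post doesn't show how `posts.json` was produced from the MariaDB database. As a hedged sketch of that step: the query assumes WordPress's standard `wp_posts` table (the `wp_` prefix varies between installs), and the hypothetical `rows_to_posts` helper reshapes the rows into the `id`/`title`/`content` keys that the indexing code later in the post reads. It also strips HTML and Gutenberg block comments from the post body with the standard library's `html.parser`, since otherwise the markup gets embedded along with the text. Fetching the rows is left to whichever MariaDB driver you use; the sample row is made up.

```python
import json
from html.parser import HTMLParser


class TextExtractor(HTMLParser):
    """Collects the text of an HTML fragment; tags and comments are ignored."""

    def __init__(self) -> None:
        super().__init__()
        self.parts: list[str] = []

    def handle_data(self, data: str) -> None:
        self.parts.append(data)


def html_to_text(fragment: str) -> str:
    """Strip HTML tags and comments (including Gutenberg <!-- wp:... --> markers)."""
    parser = TextExtractor()
    parser.feed(fragment)
    parser.close()
    # Join with spaces so adjacent paragraphs don't run together, then collapse whitespace.
    return " ".join(" ".join(parser.parts).split())


# Hypothetical export query against WordPress's standard wp_posts table;
# adjust the "wp_" prefix to match your install.
EXPORT_SQL = (
    "SELECT ID, post_title, post_content FROM wp_posts "
    "WHERE post_status = 'publish' AND post_type = 'post'"
)


def rows_to_posts(rows: list[tuple[int, str, str]]) -> list[dict[str, str]]:
    """Reshape (ID, post_title, post_content) rows into id/title/content dicts."""
    return [
        # IDs become strings because Chroma only accepts string IDs.
        {"id": str(post_id), "title": title, "content": html_to_text(content)}
        for post_id, title, content in rows
    ]


# A hypothetical row, standing in for what the MariaDB driver would return.
sample_rows = [
    (42, "Hello", "<!-- wp:paragraph --><p>Hello <b>world</b> &amp; friends</p><!-- /wp:paragraph -->"),
]
print(json.dumps(rows_to_posts(sample_rows), indent=2))
```

To produce the actual file, pass the fetched rows to `rows_to_posts` and write the result out with `json.dump`.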

For example, here’s the code I was given for generating embeddings:

```python
import requests
import json

def get_ollama_embedding(text):
    response = requests.post(
        "http://localhost:7868/api/embeddings",
        headers={"Content-Type": "application/json"},
        data=json.dumps({"text": text, "model": "mxbai-embed-large"}),
    )
    return response.json()["data"][0]["vector"]

# Assuming posts are loaded from posts.json
posts = json.load(open("posts.json"))
embeddings = []

for post in posts:
    title_embedding = get_ollama_embedding(post["title"]["rendered"]).tolist()
    content_embedding = get_ollama_embedding(post["content"]["rendered"]).tolist()
    embeddings.append({
        "post_id": post["id"],
        "title_embedding": title_embedding,
        "content_embedding": content_embedding,
    })
```

And here’s what I had after a bit of googling and adding the right libraries:

```python
import json

import chromadb
from ollama import Client

# Posts exported from WordPress, each with "id", "title" and "content" keys
with open("posts.json", encoding="utf-8") as f:
    posts = json.load(f)

ollama_client = Client(host="http://localhost:11434")

embeddings = []

for post in posts:
    print(post["title"])  # simple progress indicator

    # Embed the title and the body separately with the same model
    title_embedding = ollama_client.embeddings(
        model="mxbai-embed-large",
        prompt=post["title"],
    )
    content_embedding = ollama_client.embeddings(
        model="mxbai-embed-large",
        prompt=post["content"],
    )

    embeddings.append({
        "post_id": post["id"],
        "post_title": post["title"],
        "content_embedding": content_embedding["embedding"],
        "title_embedding": title_embedding["embedding"],
    })

# ChromaDB server listening on port 8100
chroma_client = chromadb.HttpClient(host="localhost", port=8100)

collection_name = "wordpress_posts"
# Use cosine distance for the HNSW index instead of Chroma's default (squared L2)
collection = chroma_client.get_or_create_collection(
    name=collection_name,
    metadata={"hnsw:space": "cosine"},
)

for embedding in embeddings:
    collection.upsert(
        documents=[embedding["post_title"]],          # the title is stored as the document text
        ids=[str(embedding["post_id"])],              # Chroma IDs must be strings
        embeddings=[embedding["content_embedding"]],  # only the content vector is indexed
    )
```

And my search code:

from typing import Dict

import requests

import json

import ollama

from ollama import Client

import chromadb

import argparse

chroma_client = chromadb.HttpClient(host="localhost",port="8100")

ollama_client = Client(host='localhost:11434')

collection_name = "wordpress_posts"

collection = chroma_client.get_collection(collection_name)

def search(query, n_results=10):

query_embedding = ollama_client.embeddings(

model='mxbai-embed-large',

prompt=query

)

results = collection.query(

query_embeddings=query_embedding["embedding"],

)

return results

#return [{"post_id": result["metadatas"][0]["id"], "score": result.score} for result in results]

# Example usage:

parser = argparse.ArgumentParser(description="A simple example of using argparse.")

parser.add_argument("querystring", help="A query string", type=str)

args = parser.parse_args()

search_results = search(args.querystring)

loop = 0

for document in search_results["documents"][0]:

if search_results["distances"][0][loop] <= 0.5:

print(document)

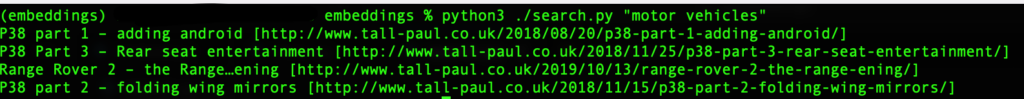

loop = loop+1And here’s the output from that code with the posts on this site as a dataset. Note that none of the posts found by the search contain the searchterm itself:

This was just a simple POC, but it works pretty well, and going from knowing very little about embeddings or how any of this works to something that works at all in a couple of hours has been pretty eye-opening.
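One possible refinement is to return more than the titles. Below is a minimal sketch of a hypothetical `format_results` helper that turns the dictionary returned by Chroma's `collection.query()` into a flat list of hits with the post ID, title and distance, keeping the same 0.5 cut-off. The example result is hand-written in that nested shape (one inner list per query embedding), not real output.

```python
from typing import Any


def format_results(results: dict[str, Any], max_distance: float = 0.5) -> list[dict[str, Any]]:
    """Turn a Chroma query result for a single query into a flat list of hits.

    Chroma nests each field one list per query embedding, hence the [0] indexing.
    Hits whose distance is above max_distance are dropped.
    """
    hits = []
    for post_id, title, distance in zip(
        results["ids"][0], results["documents"][0], results["distances"][0]
    ):
        if distance <= max_distance:
            hits.append({"post_id": post_id, "title": title, "distance": distance})
    return hits


# Hand-written example in the nested shape collection.query() returns; values are made up.
example = {
    "ids": [["12", "7", "3"]],
    "documents": [["Post A", "Post B", "Post C"]],
    "distances": [[0.21, 0.44, 0.63]],
}

for hit in format_results(example):
    print(f'{hit["distance"]:.2f}  {hit["post_id"]}  {hit["title"]}')
```

In the search script, `format_results(search(args.querystring))` could then stand in for the final printing loop.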